Leading up to the release of Visual Studio 2010 and Microsoft .NET Framework 4.0, there have been a few posts about how C# 4 now has parity with Visual Basic with regards to the ability to use Optional Parameters. Unfortunately, some of the examples that demonstrate this feature are… well… somewhat disturbing to me from a code quality perspective. In addition there is a lot of talk about how ‘cool’ this feature may appear (from 60,000 feet!), but there is little discussion about recommended guidelines for using Optional Parameters and when it is safe to do so.

Let’s start with the code analysis rule CA1026: Default parameters should not be used. Even in Visual Studio 2010, this rule still exists! Previously a large argument against using Optional Parameters was that code in VB.NET could use them but code in C# would not be able to use them. (This was especially annoying with COM interop!) Before C# 4, a C# coder would have to explicitly provide an argument for each Optional Parameter that was defined (annoying). While this is no longer a concern for C# coders, it is however still valid for consideration when creating methods that may be called from other .NET languages that don’t support the usage of Optional Parameters.

Recently this video on Named and Optional Parameters was released. While the author does a reasonable job conveying the essentials, the scenario is simply a code smell. From a clean code perspective, instead of passing a bunch of search criteria parameters to the ‘Search’ method, the SOLID principles should be used and the criteria should extracted into its own class… This would then mitigate the need for optional parameters in the first place.

In ScottGu’s blog post Optional Parameters and Named Arguments in C# 4 (and a cool scenario w/ ASP.NET MVC 2) [respect to ScottGu btw for your many good works :)], the “cool scenario” replaces a Nullable parameter named ‘page’ with an optional parameter that has a default value, citing that the Optional Parameter and default value on this public method essentially makes the behaviour of the method expressed “more concisely and clearly”.

Now, I agree that the code may appear slightly more ‘concise’, but I think it is quite arguable that it is more ‘clear’.

Side note: is ‘page’ a 1-based number or a 0-based index? Isn’t a page in the real world usually a 1-based number? e.g. “Page 1 of the search results”. In the spirit of the example code, perhaps the parameter really should be renamed to “pageIndex” - I think that would make the method more clear…

Getting back on track though: by inserting a default value into the method signature, a whisper of the internals of the business logic of this method is hard-coded into the public API.

Read that again… there is subtlety in there that leaves the feature of Optional Parameters open to unwittingly known abuse by the majority of the population! And it is the lack of clarity due to this subtlety that greatly concerns me from a code quality perspective.

What are the subtle caveats?

-

As per the CLI specification, the default value must be a compile-time constant value.

-

When compiled, the default value is emitted into a DefaultParameterValue attribute as metadata on the parameter within the method signature.

-

When the compiler is compiling the code that calls the method, the compiler reads this value from the DefaultParameterValue attribute and actually hard-codes that value into the IL at the call-site. In other words, every line of code throughout all applications that call that method have this value hard-coded in their calling assembly.

As an example, if you are using say Version 1.0 of the assembly with the method which specifies a default value of 25 for the parameter, your code will be compiled and have the value of 25 hard-coded into your assembly. If you upgrade to say Version 1.1 of the assembly with the method (which now specifies a default value of 26 instead of 25), and if you do not recompile your code, your code when executed will pass the method a value of 25. The public API has been broken, and almost everyone would be unaware!

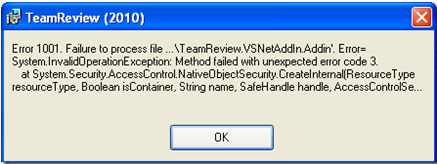

(I found that this information is described in much more detail in C# 4.0 FEATURE FOCUS - PART 1 - OPTIONAL PARAMETERS and due to my concern about the age of the post I actually verified it myself with the help of Reflector…)

Anyone else flashing back to why “public [static] readonly” values should be used instead of “const”? It’s the same argument! The const value is treated as a literal and is hard-coded into each assembly that uses the value. (Conveniently, for more information, you can read THE DIFFERENCE BETWEEN READONLY VARIABLES AND CONSTANTS)

- Alternatively, if you as the caller of the method were to actually name the parameter in your calling code, and if you upgraded to the next version of assembly with the method which has had the parameter renamed, your code will not compile! Why? Because when using Named Parameters, the parameter name itself actually needs to be considered part of your API!

What should be the guidelines for using Optional and Named Parameters?

In general, I would suggest that Optional Parameters (just like the usage of the const keyword) should only ever be used with constant values that will never ever change over time - for example, mathematical constants… like the value of PI (although I don’t know why you would make PI an Optional Parameter… but you get the idea nevertheless). Generally and unfortunately however this will typically exclude any ‘constant-like’ values in your business domain. Values in business domains, while seemingly constant for now still can change over time as the business changes!

As always there are going to be a few perspectives on this. I find that it is useful to categorise development into two areas: (a) application development, and (b) reusable library development.

Especially when developing reusable library code, extreme care needs to be taken when creating and maintaining public APIs. I would suggest that Optional Parameters should NOT be used in public APIs of reusable library code… instead revert to the usage of method overloading, or redesigning your API.

However, in application development, where all the code is highly likely to be recompiled (and often), perhaps it isn’t so bad to use the Optional Parameters?… that is, until a team member decides to clean up the code by doing some refactoring and move that method into a reusable library… and then it all begins!

Summary

So for safety, clarity, simplicity and consistency across all code-bases (especially with the widely varying technical capabilities of developers), perhaps the best practice guidance for using Optional and Named Parameters should be:

- Never use Optional Parameters in public methods; and

- Only use Optional Parameters with default values that are constant in time (like mathematical constants and not apparent business domain constant values).