Logic Apps and the Service Bus Connector - The Case of the Incomplete Message!

The Symptom

The other day I tracked down an issue my team was seeing with an Azure App Service Logic App. The Logic App was being triggered by an Azure Service Bus Connector that was configured to work with a Service Bus Topic. With a message published to the Service Bus Topic, an instance of the Logic App would execute successfully — as expected on the trigger’s configured schedule. But then, unexpectedly, on the next scheduled time period, often a new instance of the Logic App would execute and reprocess the exact same message from the Service Bus Topic. It was as if the Service Bus Topic message was never successfully “completed” and thus removed from the Topic.

What You Need To Understand

In order to understand why the same Service Bus Topic message was being processed twice, this is what you need to know.

-

The Service Bus Connector operates in ReceiveMode.PeekLock, so it is not receiving and immediately deleting the message from the Topic in one atomic call like in ReceiveMode.ReceiveAndDelete. In PeekLock mode, two calls to the Service Bus must be made. The first call is to the Receive method, and the Service Bus puts a lock on the message for a specific lock duration. The default LockDuration for a Queue or Subscription is 1 minute, and currently that cannot be configured in the Service Bus Connector. Then a second call, to the Complete method, is required to mark that message as processed and delete it from the Service Bus.

-

After a call to a Connector’s trigger method returns a result indicating that data is available to be processed and that a Logic App instance should be created and executed, the Logic App trigger mechanism immediately calls the Connector’s trigger method again. This logically is being done in case there is even more data to be processed. This makes sense because if there is more data available to be processed, one typically would want that data being processed as soon as possible, and would not want to wait for the next scheduled trigger time period.

-

Connectors can have their own specified triggerState data passed from one call of their trigger method to subsequent calls of their trigger method.

-

The Service Bus Connector passes the message’s unique LockToken as triggerState from the first successful trigger invocation to its next trigger call that runs immediately after it.

-

When the Service Bus Connector trigger method is executed, one of the first things that it does is to determine if the triggerState data has been provided. If the triggerState data has been provided, the Service Bus Connector calls the Complete method of the message represented by that given triggerState/LockToken. Only after doing that will it attempt to receive the next message from the configured Topic or Queue.

The Cause

Now that we understand how the Complete method is called as part of the Service Bus Connector’s trigger mechanism, it is easy to understand the cause of the problem.

If the second call to the trigger fails (the call that would complete the message), for instance, because of a transient error on the gateway, then the Service Bus message will not be completed — until perhaps the next scheduled trigger.

However, after the default lock duration of 1 minute expires, the lock is lost and Service Bus makes the message available to be received again.

This is exactly what happened — the message was not completed and when the next scheduled trigger executes, the same message is processed again.

Proof — The Smoking Trigger

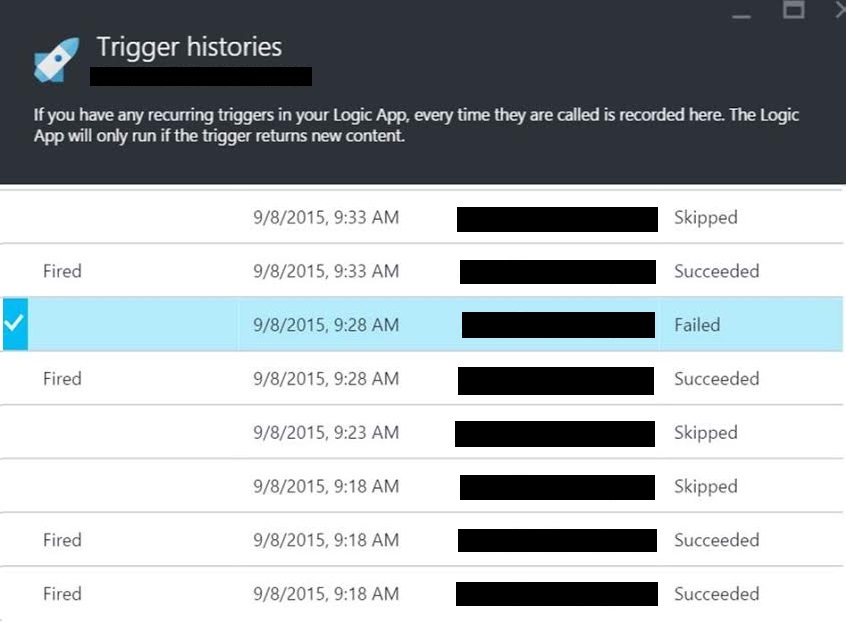

From the Trigger History of the Logic App, we can see the trigger that failed in the image below.

The call to this trigger returned a 502 “Bad Gateway” error.

1502 - Web server received an invalid response while acting as a gateway or proxy server.

The full JSON of the output message for the failed trigger is:

1{

2 "headers": {

3 "date": "Mon, 07 Sep 2015 23:28:32 GMT",

4 "server": "Microsoft-IIS/8.0"

5 },

6

7 "body": "<!DOCTYPE html PUBLIC \"-//W3C//DTD XHTML 1.0 Strict//EN\" \"http://www.w3.org/TR/xhtml1/DTD/xhtml1-strict.dtd\">\r\n<html xmlns=\"http://www.w3.org/1999/xhtml\">\r\n<head>\r\n<meta http-equiv=\"Content-Type\" content=\"text/html; charset=iso-8859-1\"/>\r\n<title>502 - Web server received an invalid response while acting as a gateway or proxy server.</title>\r\n<style type=\"text/css\">\r\n<!--\r\nbody{margin:0;font-size:.7em;font-family:Verdana, Arial, Helvetica, sans-serif;background:#EEEEEE;}\r\nfieldset{padding:0 15px 10px 15px;} \r\nh1{font-size:2.4em;margin:0;color:#FFF;}\r\nh2{font-size:1.7em;margin:0;color:#CC0000;} \r\nh3{font-size:1.2em;margin:10px 0 0 0;color:#000000;} \r\n#header{width:96%;margin:0 0 0 0;padding:6px 2% 6px 2%;font-family:\"trebuchet MS\", Verdana, sans-serif;color:#FFF;\r\nbackground-color:#555555;}\r\n#content{margin:0 0 0 2%;position:relative;}\r\n.content-container{background:#FFF;width:96%;margin-top:8px;padding:10px;position:relative;}\r\n-->\r\n</style>\r\n</head>\r\n<body>\r\n<div id=\"header\"><h1>Server Error</h1></div>\r\n<div id=\"content\">\r\n <div class=\"content-container\"><fieldset>\r\n <h2>502 - Web server received an invalid response while acting as a gateway or proxy server.</h2>\r\n <h3>There is a problem with the page you are looking for, and it cannot be displayed. When the Web server (while acting as a gateway or proxy) contacted the upstream content server, it received an invalid response from the content server.</h3>\r\n </fieldset></div>\r\n</div>\r\n</body>\r\n</html>\r\n"

8}

The Remedy

This situation should not happen often, but unfortunately it was occurring multiple times per day, and only ever on the second call to the trigger method. The only real solution is to follow Microsoft’s advice and design your systems to be idempotent, because it can and occasionally will happen.

In addition to that, to reduce the frequency of the situation, hopefully Microsoft will release a change in the near future so that failed calls to the trigger method are automatically retried.